CEQ discussion


The CEQ has just 10 Likert items.

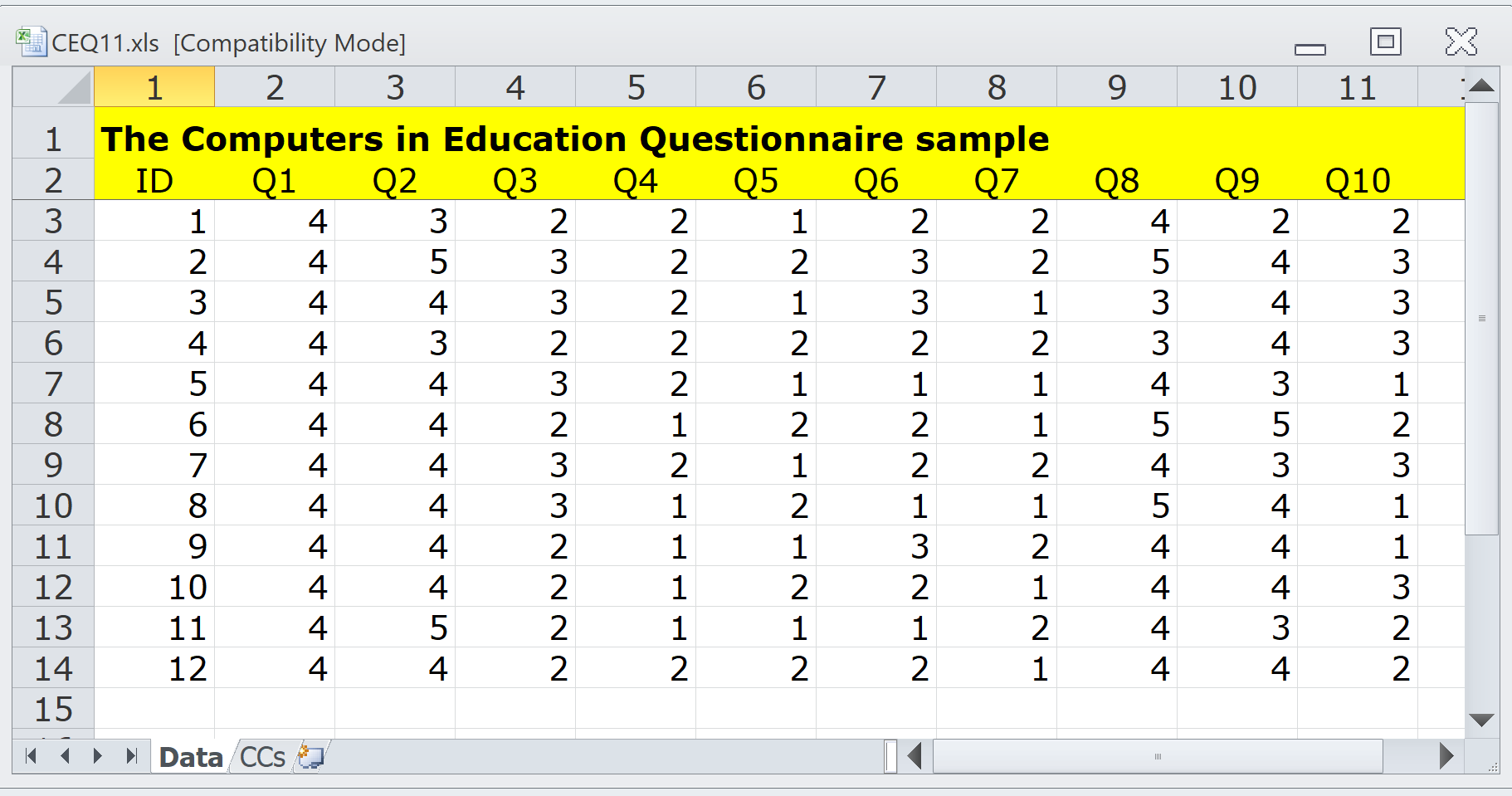

My student recorded the principals' answers in an Excel workbook using these codes:

Strongly disagree=1

Disagree=2

Neither=3

Agree=4

Strongly agree=5

The student then wanted to score the CEQ.

Some of the CEQ items are negatively worded, such as these two:

(6) Computers are a danger because they dehumanize teaching.

1 2 3 4 5

(7) Computers are of little value in the classroom because they are too difficult to use.

1 2 3 4 5

Before the student asked Excel to score the principals' responses, she used an instruction in Excel to "reverse" the negatively-worded items so that they became:

Strongly disagree=5

Disagree=4

Neither=3

Agree=2

Strongly agree=1

This is called "reverse scoring". She did it because she wanted to always give 5 points to the most positive response to an item. On some items the most positive response was "strongly disagree".
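For readers who would rather see the recoding as code than as an Excel instruction, here is a minimal Python sketch. It is not the instruction my student used. The set of negatively-worded items is an assumption: this page only identifies items 4, 6, and 7 as negatively worded, and the full CEQ may have others. The function names are hypothetical.

```python
# Assumption: only items 4, 6 and 7 are named as negatively worded
# on this page; adjust the set to match the actual questionnaire.
NEGATIVE_ITEMS = {4, 6, 7}
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_score(code):
    """Map 1->5, 2->4, 3->3, 4->2, 5->1 on a five-point scale."""
    if not SCALE_MIN <= code <= SCALE_MAX:
        raise ValueError(f"response code out of range: {code}")
    return SCALE_MIN + SCALE_MAX - code


def recode(responses):
    """Reverse the negatively-worded items; leave the others alone.

    responses: dict mapping item number -> recorded code (1..5).
    Returns a new dict; the input is not modified.
    """
    return {
        item: reverse_score(code) if item in NEGATIVE_ITEMS else code
        for item, code in responses.items()
    }
```

On a scale from 1 to 5 the reversal is simply 6 minus the recorded code, so the same recoding can be done with ordinary spreadsheet arithmetic.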

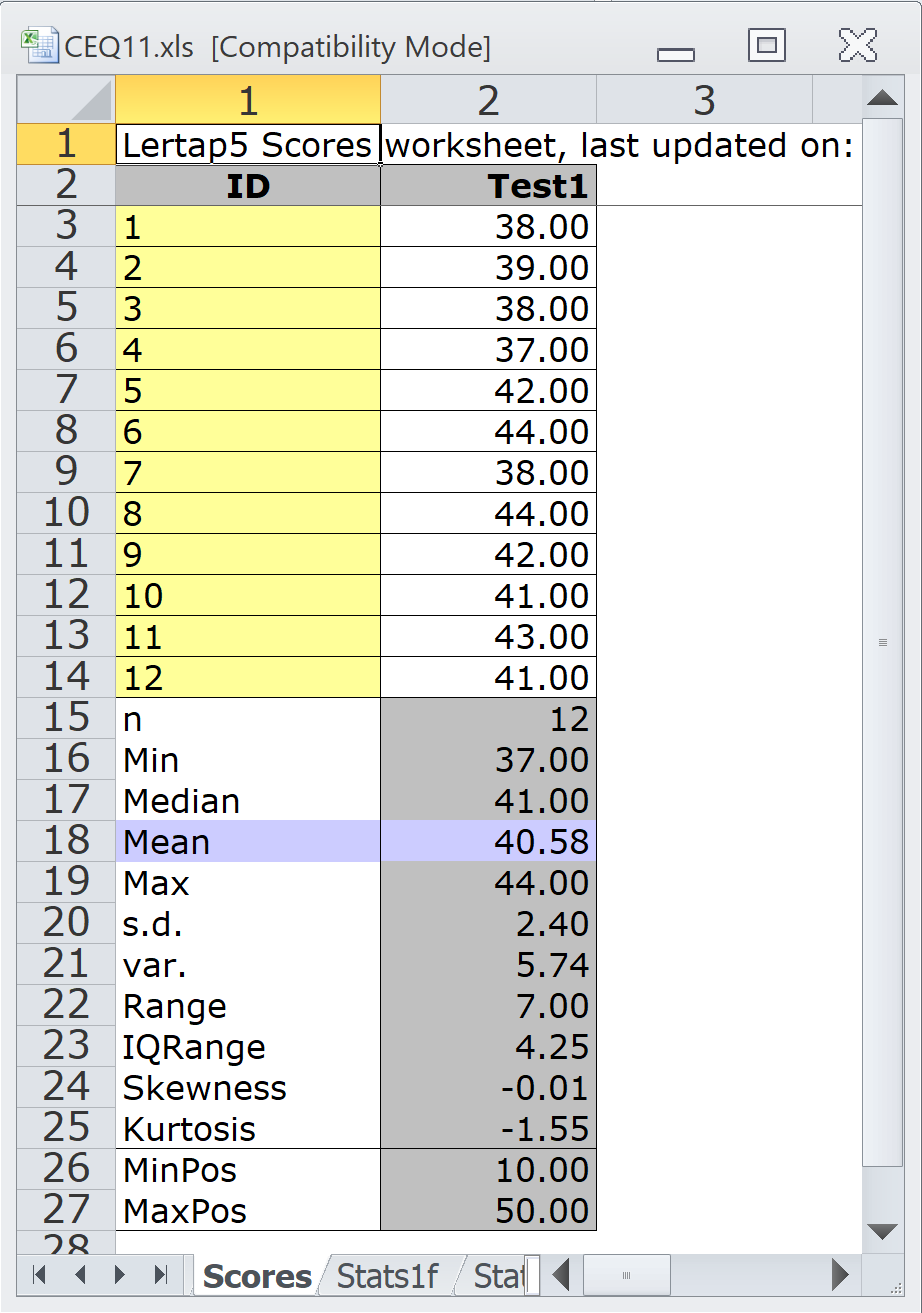

Since there were 10 items, with 1 the lowest score on each item, and 5 the highest, when adding up a principal's responses over all 10 items, the lowest possible score would be 10, the highest possible score would be 50.
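The arithmetic behind the 10-to-50 range can be sketched in a few lines of Python. The function names are hypothetical, and the scores passed to `total_score` are assumed to have been reverse scored already.

```python
def score_range(n_items, low=1, high=5):
    """Lowest and highest possible total over n_items Likert items."""
    return n_items * low, n_items * high


def total_score(item_scores):
    """Sum one respondent's item scores (after reverse scoring)."""
    return sum(item_scores)


# Ten items scored 1..5 give totals between 10 and 50.
lowest, highest = score_range(10)
```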

Here is what Excel said about the scores for the 12 principals:

There was not much variance in the scores from the 12 principals. The average score was a little over 40, and the range, lowest score to highest score, was just 37 to 44.

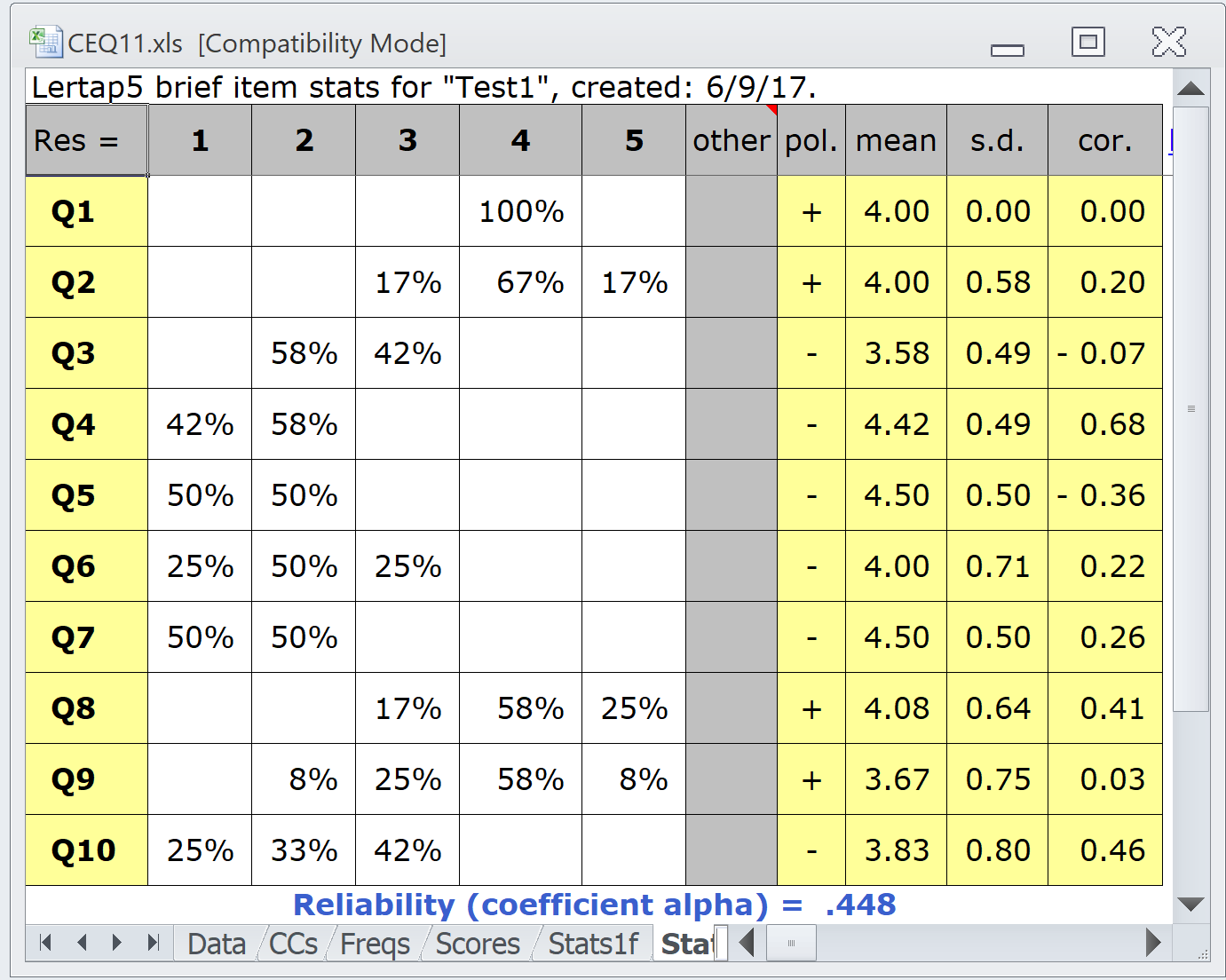

Here are the item results:

The student saw the .448 value for reliability and was, as they say in English, "taken aback". Disappointed.

I told her to go and have a cup of coffee and a donut and relax. This was not the end of the world.

The low reliability figure suggests measurement error. In this case the most likely source of error is inconsistency in the item responses: the 10 items may not all be measuring the same construct. The items themselves may not be "consistent"; they may be measuring different attitudes.
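To make "consistency among items" concrete, here is a minimal Python sketch of coefficient alpha (Cronbach's alpha), a common internal-consistency reliability estimate. This page does not name the statistic behind the .448 figure, so treating it as alpha is an assumption. The function name and data layout are hypothetical, and no real CEQ data are used.

```python
from statistics import pvariance


def cronbach_alpha(rows):
    """Coefficient alpha for a respondents-by-items table of scores.

    rows: list of respondents, each a list of k item scores that
    have already been reverse scored where needed.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Variance of each item across respondents.
    item_vars = [pvariance([row[i] for row in rows]) for i in range(k)]
    # Variance of the respondents' total scores.
    total_var = pvariance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

When the items rise and fall together across respondents, the variance of the totals is large relative to the sum of the item variances and alpha approaches 1. When the items are unrelated, the two quantities are about equal and alpha falls toward 0.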

What construct? "Attitude to the use of computers in education among high school principals."

Now, let's look at the 10 items again. Can we perhaps figure out what may have gone wrong?

We could make use of the "cor." column above. It says that four items, Q1, Q3, Q5, and Q9, do not correlate well with the other items.
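This page does not say how the "cor." column is computed. One common choice is the correlation between each item and the sum of the remaining items, and the Python sketch below assumes that definition. The function names are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation, or None when either list has no variance."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None  # correlation is undefined for a constant list
    return sxy / sqrt(sxx * syy)


def item_rest_correlations(rows):
    """Correlate each item with the total of the other items.

    rows: list of respondents, each a list of item scores that
    have already been reverse scored where needed.
    """
    k = len(rows[0])
    correlations = []
    for i in range(k):
        item = [row[i] for row in rows]
        rest = [sum(row) - row[i] for row in rows]
        correlations.append(pearson(item, rest))
    return correlations
```

An item on which every respondent gives the same code has no variance, so its correlation with the other items cannot be computed; the sketch returns `None` for such an item rather than a number.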

What's happening?

Why don't we step back and look, for example, at Q8? This is, I believe, the core of what my student hoped the principals would say: they saw value in the use of computers.

Q1 supports this -- all the principals agreed. They disagreed on Q4, which was a negatively-worded question, so we could say that the principals felt that computers in schools were not just a "luxury".

But why, then, did they show some ambivalence on Q9?

My student was perplexed (confused).

So was I. I told the student that the low reliability figure meant she would not be justified in using the principals' overall scores as an indication of their attitude. Low reliability indicates the presence of measurement error.

I also told her that she would do well to go out and interview the principals. She did.

She found that some principals were concerned with the popularity of computer games, and felt that teachers would have to carefully watch students to keep them from playing games. Another concern was cost -- while the principals did see value in the use of computers in learning, some said they'd have to make sacrifices somewhere in order to find the funds required to buy computers.

When my student finally wrote up the results of her study, her main conclusion, which I supported, was that principals were quite certain that computers did have value in education; they felt that computers could have a positive impact on learning. But, at the same time, they did have reservations about costs, and about the possible negative influence of computer games. One principal said that he thought computers had "real promise" but he didn't want lots of class time devoted to just teaching how to use computers.

This survey turned out to be successful in the end. My student was able to uncover the principals' attitudes about computers. The low reliability of the questionnaire prevented her from using the overall survey scores (from a possible 10 to 50) as a "scale". She was not happy about this, but in this case the low reliability prompted her to go out and dig deeper by using interviews.