Our research questions

A few topics back, we posed some “research questions” and proposed to set about answering them. And so we have, or almost so. We’ve looked at both the cognitive and affective subtests, finding out which of the cognitive questions were the most difficult. To see how the subtest scores were distributed, we activated the histogrammer.

Did the subtests have adequate reliability? Well, the cognitive subtest, “Knwldge”, came through with an alpha reliability of 0.91 and a standard error of measurement of 8.1% (or 2.03 in raw score terms), which is not bad. The affective subtest, “Comfort”, fared less well, with an alpha figure of 0.63, which is weak. We’d have to take a closer look at that subtest; as it stands, we would not feel confident, not too comfortable (as it were), in using its scores as reliable indicators. For the moment, we might compromise by proposing to look at Comfort results only at the level of the individual items (often not a bad compromise at all).
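To see how the two forms of the standard error of measurement relate, here is a minimal sketch. It assumes the classical formula, SEM = SD × sqrt(1 − reliability), and it assumes the 8.1% figure expresses the SEM as a share of the maximum possible score. Neither the standard deviation nor the maximum score is quoted above; both are back-computed from the reported figures, and the function names are ours, not Lertap's.

```python
import math


def sem_from_alpha(sd: float, alpha: float) -> float:
    """Classical standard error of measurement: SD * sqrt(1 - reliability)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie between 0 and 1")
    return sd * math.sqrt(1.0 - alpha)


def implied_sd(sem: float, alpha: float) -> float:
    """Invert the formula: the SD that a given SEM and alpha would imply."""
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must be at least 0 and less than 1")
    return sem / math.sqrt(1.0 - alpha)


# Figures reported for the Knwldge subtest.
alpha = 0.91
sem_raw = 2.03   # raw score units
sem_pct = 8.1    # assumed to be a percentage of the maximum possible score

# Back-computed values (assumptions, not taken from a Lertap report).
sd = implied_sd(sem_raw, alpha)
max_score = sem_raw / (sem_pct / 100.0)

print(f"implied SD: {sd:.2f}")
print(f"implied maximum score: {max_score:.1f}")
```

Under these assumptions the implied standard deviation is a little under 7 raw score points, and the implied maximum score is about 25.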

How did respondent attitudes correspond to how well they did on the cognitive test? The correlation between Knwldge and Comfort was found to be 0.80, which is substantial, perhaps even a bit surprising, given the relatively low reliability of the Comfort scale. We looked at a scatterplot of the two scores, and, although we didn’t say anything at the time, there is a pattern there: people whose Comfort scores were low had low-ish scores on the cognitive test, Knwldge. Respondents with the very highest Comfort scores, those at 40 and above, also had very high Knwldge scores, with one exception.
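Why might 0.80 be surprising? In classical test theory, the correlation between two observed scores is limited by their reliabilities: it is not expected to exceed the square root of their product. The sketch below works that ceiling out from the two alpha values quoted above. It is an illustration under classical assumptions, not something Lertap reports, and the function names are ours.

```python
import math


def attenuation_ceiling(rel_x: float, rel_y: float) -> float:
    """Largest observed correlation classical test theory expects for two scores."""
    return math.sqrt(rel_x * rel_y)


def disattenuated(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Correction for attenuation: estimated correlation between true scores."""
    return r_xy / math.sqrt(rel_x * rel_y)


alpha_knwldge = 0.91
alpha_comfort = 0.63
r_observed = 0.80

ceiling = attenuation_ceiling(alpha_knwldge, alpha_comfort)
corrected = disattenuated(r_observed, alpha_knwldge, alpha_comfort)

print(f"expected ceiling: {ceiling:.3f}")
print(f"corrected correlation: {corrected:.3f}")
```

The observed 0.80 sits slightly above the ceiling, so the corrected value comes out above 1. That cannot be a true correlation. A common reading is that alpha, which is generally taken as a lower bound on reliability, is understating the reliability of Comfort; sampling error is another possibility.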

We could use Lertap’s support for external-criterion analyses to dig a bit more here, asking for correlations between each of the Comfort items and the overall Knwldge score. We go up to the Lertap tab, to the Run menu, then to the + More option, and, finally, click on “Use external criterion”. We tell Lertap to use the Knwldge score as the criterion, that is, the score found in column 2 of the Scores worksheet. Then, when presented with the data set’s subtests, we say “No”, we do not want to “work with” the Knwldge subtest, but then say “Yes” when the Comfort subtest shows up.

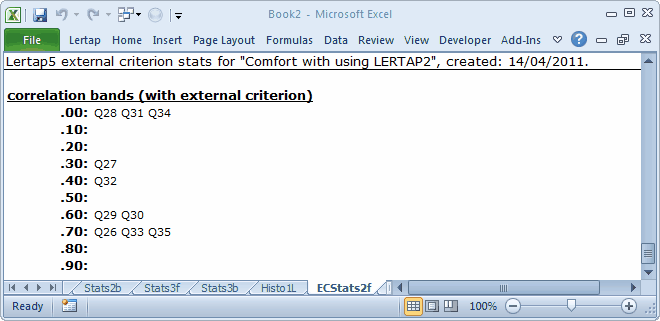

Lertap responds by doing its thing, producing a new report called ECStats2f, with item-level summaries of correlations, and the “correlation bands (with external criterion)”:

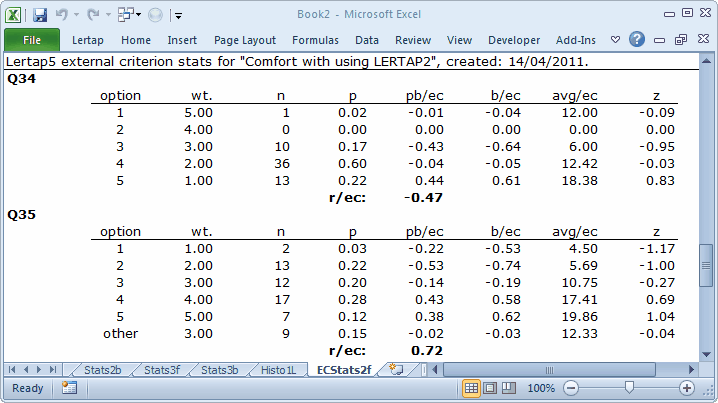

Items Q28, Q31 and Q34 actually had negative correlations with the Knwldge score, something readily visible by just scrolling up to the item-level data in the ECStats2f report. For example, here's a snapshot of Q34 (and Q35), with r/ec for Q34 at -0.47:

(r/ec stands for correlation with external criterion.)

The same three items had the lowest correlations in the ordinary analysis, as shown in the Stats2b and Stats2f reports. There are things to work on here, more questions to answer. Items Q28, Q31, and Q34 seem to want to stand on their own: the results indicate that these three questions don’t follow the response pattern exhibited by the other seven items in the Comfort subtest.

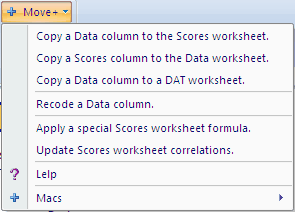
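The idea behind an r/ec figure can be sketched outside Lertap. The example below assumes r/ec is an ordinary Pearson correlation between each item's responses and the external criterion score. The item names and data are invented for illustration; they are not the Comfort responses, and the helper function is ours, not part of Lertap.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical external criterion: one total score per respondent.
criterion = [5, 9, 12, 15, 18, 22]

# Hypothetical item responses on a 1-to-5 scale, one list per item.
items = {
    "ItemA": [1, 2, 2, 3, 4, 5],   # rises with the criterion
    "ItemB": [5, 4, 4, 3, 2, 1],   # falls as the criterion rises
}

# Correlate every item with the criterion, then flag the negative ones.
r_ec = {name: pearson_r(resp, criterion) for name, resp in items.items()}
negative = [name for name, r in r_ec.items() if r < 0]

for name, r in r_ec.items():
    print(f"{name}: r/ec = {r:+.2f}")
print("negative correlation with criterion:", negative)
```

Items flagged this way are the ones that run against the criterion, as Q28, Q31 and Q34 did against Knwldge.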

What about a relationship between answers to the Comfort items and “experience levels”? There are two experience variables in the Data worksheet, recorded in columns 38 and 39. Column 39 indicates the number of years the respondent said s/he’d been using computers. Can we correlate Comfort responses with the data in this column of the Data sheet? Yes, you bet. However, the information in column 39 has to be copied to the Scores worksheet first. Lertap’s tab on the Excel ribbon has a set of Move options; the first of them allows a column in the Data worksheet to be copied and moved to the Scores worksheet. Once this is done, the Run menu is accessed again, and “Use external criterion” is requested once more.

When would you want to use Move’s second option? When would you want to copy a column from the Scores worksheet to the Data worksheet? Well, a common case arises when Lertap’s flexible scoring capabilities are used to form affective test scores, which are then moved back to the Data worksheet. This might be done, for example, if a program such as SPSS were to be used for more complex data analyses.

Here end-ith the Cook's Tour. Hope you enjoyed it. Questions? Just write: support@lertap.com.


Tidbits:

For more about external criteria, see the "Using an external criterion" topic in Chapter 8 of the manual.